Financial time series analysis has hit a wall. Traditional databases choke on similarity search. Embedding pipelines fragment across teams. And analysts—the people who actually make investment decisions—still live in Excel.

Vector databases solve the first two problems. Excel integration solves the third.

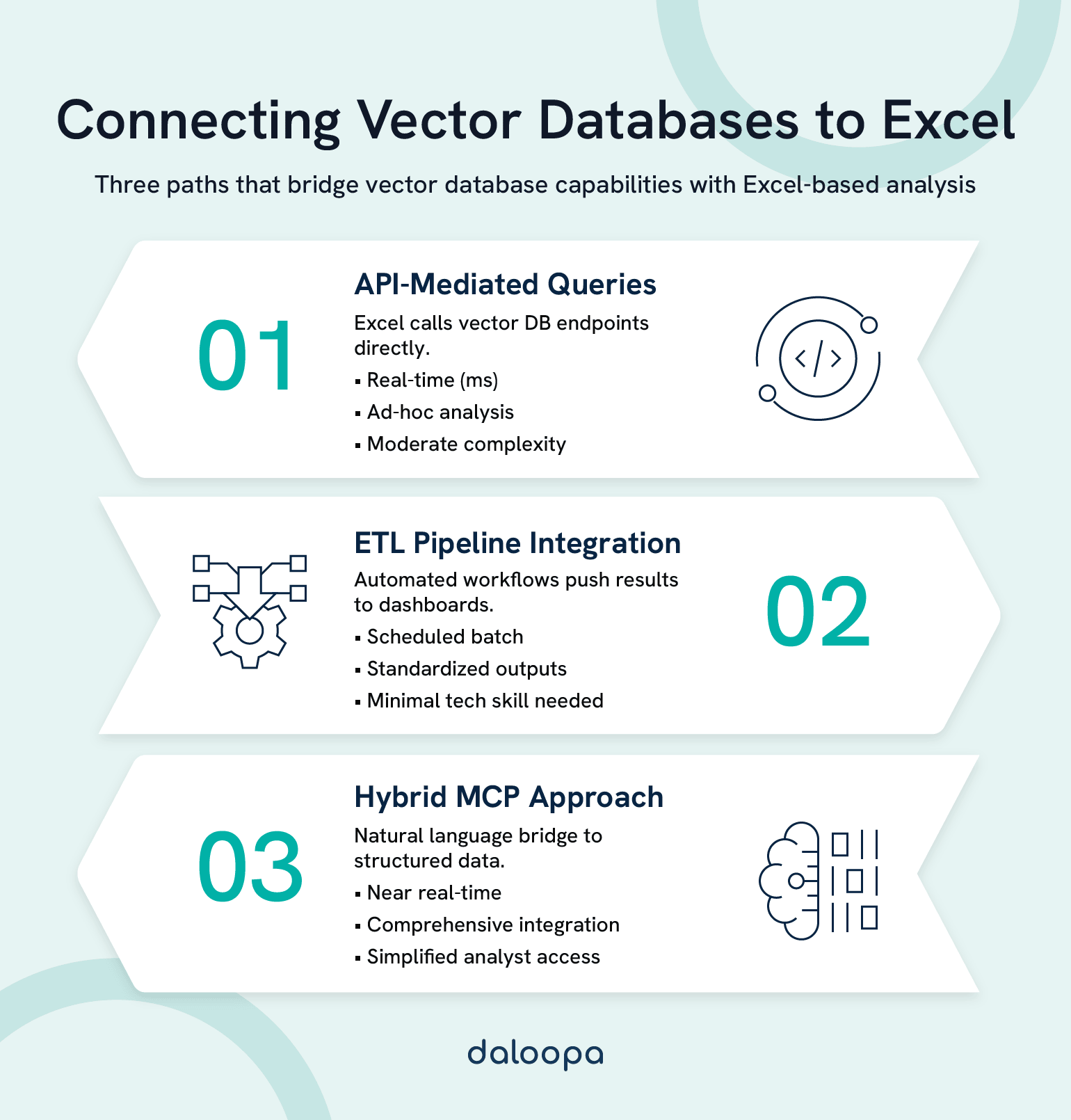

Three integration patterns connect the two. API-mediated pipelines let Excel call vector database endpoints directly for real-time similarity queries. Automated ETL workflows handle batch processing, delivering vectorized results to dashboards on schedule. Hybrid MCP approaches bridge structured financial data with natural language interfaces while keeping Excel as the analyst’s home base.

The performance shift is dramatic: portfolio similarity queries drop from 800 milliseconds to under 10. Pattern recognition scales across millions of time series. Anomaly detection runs continuously instead of nightly.

This guide delivers the architectural blueprints, algorithm selection frameworks, and implementation patterns your infrastructure team needs to deploy vector database solutions that analysts will actually use.

Key Takeaways

- Vector databases enable semantic similarity search that traditional relational and time series databases cannot efficiently provide—finding securities that behave like a reference based on learned patterns, not predefined attributes.

- High-impact use cases include portfolio similarity search for non-obvious diversification candidates, vector-based anomaly detection for novel fraud patterns, and historical pattern matching for scenario-grounded forecasting. Each lets analysts ask interesting questions without spending hours or days on manual data crunching.

- Start bounded, design for adoption: Prove value with a constrained pilot (portfolio similarity offers clear metrics), then expand based on measured analyst usage alongside technical performance.

Why Vector Databases Matter for Financial Time Series

The Limitations of Traditional Databases for Time Series AI Workloads

The problem isn’t speed—it’s architecture.

A relational database running a portfolio similarity query performs brute-force distance calculations across every candidate vector. With 512-dimensional embeddings spanning 10,000 securities, that’s roughly 5.12 million multiply-add operations (512 × 10,000) for every query, before any ranking begins. B-tree indexes, built for range queries and exact matches, offer zero acceleration for nearest-neighbor searches in high-dimensional space.
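To make the arithmetic concrete, here is a minimal sketch of the exhaustive scan described above. The function names and the toy universe are illustrative assumptions, not part of any database API; cosine similarity stands in for whatever distance metric a real deployment uses.

```python
import heapq
import math
import random

def brute_force_multiply_adds(dimensions: int, candidates: int) -> int:
    """Multiply-add count for one exhaustive scan: one per dimension per candidate."""
    return dimensions * candidates

def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def brute_force_top_k(query, vectors, k):
    """Score every candidate against the query, then keep the k best."""
    scored = ((cosine_similarity(query, vec), name) for name, vec in vectors.items())
    return heapq.nlargest(k, scored)

# Toy universe (hypothetical): 200 securities with 16-dimensional embeddings.
random.seed(0)
universe = {f"SEC{i:03d}": [random.gauss(0, 1) for _ in range(16)] for i in range(200)}
top_matches = brute_force_top_k(universe["SEC000"], universe, k=5)

print(brute_force_multiply_adds(512, 10_000))  # 5120000
print(top_matches[0][1])  # SEC000: a vector is always most similar to itself
```

Every query repeats the full scan, so cost grows linearly with both the number of securities and the embedding width.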

Time series databases handle temporal queries well but lack native approximate nearest neighbor support. The curse of dimensionality compounds the challenge: as embedding dimensions increase, the volume of the space grows exponentially, so space-partitioning indexes degrade toward full scans while distance metrics lose discriminative power.⁴

The practical impact? When portfolio similarity queries take 800ms instead of 8ms, analysts stop running them. When anomaly detection consumes hours of compute, it runs nightly instead of continuously. Infrastructure becomes the bottleneck that shapes—and limits—what analysis is even attempted.

| Capability | Traditional RDBMS | Time Series DB | Vector Database |
| --- | --- | --- | --- |
| Similarity search | Brute-force only | Limited/none | Native ANN |
| High-dimensional indexing | B-tree (ineffective) | Time-partitioned | HNSW, IVF, PQ |
| Embedding storage | BLOB workaround | Array types | Native vectors |
| Sub-10ms ANN queries | Not achievable | Not achievable | Standard |

What Vector Databases Enable for Financial Analysis

Vector databases store embedding vectors as first-class data types and maintain index structures that answer approximate nearest neighbor queries in sublinear time (roughly logarithmic for graph-based indexes such as HNSW) rather than the linear time of a full scan. For finance, this unlocks three capabilities traditional infrastructure cannot efficiently support.

- Semantic similarity search finds securities that behave like a reference—not based on matching attributes, but on learned behavioral patterns. Query for “securities similar to this mid-cap industrial with defensive characteristics” and get results based on embedded factor exposures, not just sector codes and market cap filters.

- Anomaly detection at scale identifies outliers in high-dimensional transaction spaces without predefined rules. Rather than coding what “suspicious” looks like, vector approaches learn normal behavioral embeddings and flag deviations—catching novel fraud patterns while reducing false positives on unusual-but-legitimate activity.

- Pattern recognition across time series matches current conditions to historical analogues. A 90-day price history embedding captures volatility regimes, momentum characteristics, and factor exposures—enabling queries like “find historical periods that looked like now” for scenario analysis.

The Excel Integration Imperative

Financial analysis infrastructure exists to serve analysts. Analysts live in Excel.

Excel provides flexibility, auditability, and a modeling paradigm matching how financial professionals think. A similarity search capability requiring Python, Jupyter notebooks, or tickets to the data engineering team will see limited adoption. The most sophisticated embedding pipeline delivers zero value if results don’t reach the spreadsheets where portfolio decisions actually happen.

The infrastructure challenge isn’t deploying vector search. It’s connecting that capability to Excel in ways that feel native rather than bolted-on.

Core Technical Concepts: Embeddings, Similarity Search, and Indexing

Vector Embeddings for Financial Time Series

An embedding transforms raw financial data into a dense vector where distance reflects similarity: the closer two vectors sit, the more alike the underlying securities behave. Securities with similar characteristics cluster together; dissimilar ones separate. The embedding becomes a compressed behavioral signature enabling mathematical comparison.

Consider a 256-dimensional embedding of a stock’s 90-day price history. As a mental model, dimensions 1-64 might encode return distribution characteristics, dimensions 65-128 volatility regime information, dimensions 129-192 momentum and mean-reversion signals, and the remaining dimensions cross-asset correlation patterns. In practice the neural encoder learns these representations from data and rarely separates them this cleanly. What matters is the result: a vector where Euclidean distance corresponds to behavioral similarity.

Three generation approaches serve different needs:

- Pretrained models adapted for finance offer the fastest path to production. Transformer architectures trained on general time series can be fine-tuned on financial datasets with limited proprietary data. The tradeoff: potential domain mismatch where models miss finance-specific patterns.

- Custom encoders deliver superior performance for firms with substantial historical data. Training on years of proprietary trading data produces embeddings tuned to patterns that matter for specific strategies. Significant investment, but defensible where embedding quality directly impacts alpha.

- Hybrid approaches combine learned representations with engineered features. Technical indicators, fundamental ratios, and factor exposures concatenated with neural embeddings ensure important known signals survive the encoding. This pragmatic approach often outperforms pure deep learning in financial contexts.
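The hybrid approach can be sketched in a few lines. This is a minimal illustration under stated assumptions: the two engineered features (volatility and momentum), the `feature_weight` knob, and the placeholder learned vector are all hypothetical choices, and a production pipeline would typically standardize engineered features across the whole universe before concatenating.

```python
import math
import statistics

def engineered_features(prices):
    """Hand-built signals from a price history: volatility and momentum (illustrative choices)."""
    returns = [math.log(later / earlier) for earlier, later in zip(prices, prices[1:])]
    volatility = statistics.pstdev(returns)
    momentum = prices[-1] / prices[0] - 1.0
    return [volatility, momentum]

def l2_normalize(vector):
    norm = math.sqrt(sum(x * x for x in vector))
    return [x / norm for x in vector] if norm > 0 else list(vector)

def hybrid_embedding(learned, prices, feature_weight=1.0):
    """Concatenate a normalized learned embedding with weighted engineered features.

    feature_weight is a hypothetical tuning knob controlling how much the
    engineered signals contribute to distance calculations.
    """
    learned_part = l2_normalize(learned)
    feature_part = [feature_weight * x for x in engineered_features(prices)]
    return learned_part + feature_part

# Placeholder values: a real pipeline would take `learned` from a trained encoder.
learned = [0.12, -0.40, 0.33, 0.08]
prices = [100.0, 101.5, 99.8, 102.3, 104.0]
vector = hybrid_embedding(learned, prices)
print(len(vector))  # 6
```

Because the engineered features occupy their own dimensions, known signals remain directly visible to the distance metric instead of depending on the encoder to preserve them.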

Dimensionality selection: Higher dimensions (512-1024) capture more nuance but increase storage and latency. Lower dimensions (64-128) query faster but may lose discriminative power. For most financial applications, 256-512 dimensions balance expressiveness against computational overhead.
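The storage side of this tradeoff is simple arithmetic. The sketch below assumes 32-bit floats (4 bytes per value) and counts raw vector payload only; index structures add overhead on top, and the helper name is illustrative.

```python
def index_payload_bytes(num_vectors: int, dimensions: int, bytes_per_value: int = 4) -> int:
    """Raw vector storage only (float32 by default); index overhead is extra."""
    return num_vectors * dimensions * bytes_per_value

for dims in (64, 128, 256, 512, 1024):
    gigabytes = index_payload_bytes(1_000_000, dims) / 1e9
    print(f"{dims:>4} dims: {gigabytes:.3f} GB per million vectors")
```

Doubling the dimension doubles both the payload and the work per distance calculation, which is why the middle of the range is a common starting point.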

Similarity Search and Approximate Nearest Neighbor (ANN) Algorithms

Exact nearest neighbor search computes distances to every vector in the database, an O(n) cost per query that becomes prohibitive at scale. Approximate nearest neighbor algorithms trade a small amount of accuracy for speed, achieving 95%+ recall while cutting query complexity to sublinear, roughly O(log n) for graph-based indexes.²

Two algorithm families dominate financial applications:

- HNSW (Hierarchical Navigable Small World) builds a multi-layer navigable graph connecting vectors to neighbors at multiple hierarchy levels.¹ Queries navigate from coarse upper layers to fine-grained lower layers, rapidly narrowing the search space. HNSW can deliver sub-10ms queries with 95%+ recall on million-vector datasets. The cost: significantly higher memory requirements than IVF due to graph structure overhead.

- IVF (Inverted File Index) partitions vector space into clusters, searching only relevant clusters at query time.³ IVF handles very large datasets (100M+ vectors) more efficiently than HNSW when latency tolerance exists. Query latency varies significantly based on dataset size and nprobe settings, trading accuracy for speed—higher nprobe values improve recall but increase response time.⁵
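The IVF idea and its nprobe tradeoff can be illustrated with a toy implementation. This is a teaching sketch in pure Python, not how Faiss or any production library implements it: the function names, the handful of k-means passes, and the random data are all assumptions.

```python
import random

def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))

def assign(vectors, centroids):
    """Put each vector's index into the inverted list of its nearest centroid."""
    lists = [[] for _ in centroids]
    for idx, vec in enumerate(vectors):
        nearest = min(range(len(centroids)), key=lambda c: squared_distance(vec, centroids[c]))
        lists[nearest].append(idx)
    return lists

def build_ivf(vectors, nlist, iterations=5, seed=0):
    """Cluster vectors with a few k-means passes; return centroids and inverted lists."""
    rng = random.Random(seed)
    centroids = [list(v) for v in rng.sample(vectors, nlist)]
    dims = len(vectors[0])
    for _ in range(iterations):
        for c, members in enumerate(assign(vectors, centroids)):
            if members:  # keep the old centroid if a cluster emptied out
                centroids[c] = [sum(vectors[i][d] for i in members) / len(members) for d in range(dims)]
    return centroids, assign(vectors, centroids)

def ivf_search(query, vectors, centroids, lists, k, nprobe):
    """Scan only the nprobe clusters whose centroids are closest to the query."""
    order = sorted(range(len(centroids)), key=lambda c: squared_distance(query, centroids[c]))
    candidates = [i for c in order[:nprobe] for i in lists[c]]
    candidates.sort(key=lambda i: squared_distance(query, vectors[i]))
    return candidates[:k]

random.seed(1)
data = [[random.gauss(0, 1) for _ in range(8)] for _ in range(500)]
centroids, lists = build_ivf(data, nlist=10)
query = data[42]
exact = ivf_search(query, data, centroids, lists, k=10, nprobe=10)  # all clusters: exact scan
approx = ivf_search(query, data, centroids, lists, k=10, nprobe=2)  # fewer clusters: faster, may miss neighbors
recall = len(set(exact) & set(approx)) / len(exact)
print(f"recall@10 with nprobe=2: {recall:.2f}")
```

Setting nprobe equal to the number of clusters reproduces the exhaustive result; lowering it shrinks the candidate set and the response time at the cost of possibly missing neighbors that fall in unvisited clusters.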

| Factor | HNSW Preferred | IVF Preferred |
| --- | --- | --- |
| Dataset size | Generally < 10M vectors | Generally > 10M vectors |
| Latency requirement | < 10ms | < 100ms acceptable |
| Memory constraints | Sufficient RAM | Memory-limited |
| Update frequency | Frequent updates | Batch updates OK |
| Recall requirement | > 95% | > 90% acceptable |

For real-time portfolio similarity, HNSW is typically the right choice: its latency advantage justifies the memory cost. For large-scale historical pattern matching or batch anomaly detection, IVF often wins on cost-performance.

Indexing Strategies for Time Series Data

Vector indexes differ fundamentally from traditional database indexes. Rather than organizing data for range queries, they construct navigational structures optimized for nearest-neighbor traversal.

- Temporal partitioning segments vectors by time window—separate indexes per quarter or year. This accelerates time-scoped queries common in backtesting and enables efficient refresh as new data arrives without full rebuilds.

- Metadata filtering combines vector similarity with attribute constraints. “Securities similar to X within technology, market cap above $10B” requires efficient filter integration—applying constraints during rather than after vector search.

- Multi-index strategies maintain separate indexes for different purposes. Short-horizon embeddings (30-day patterns) in one index for trading signals; long-horizon embeddings (2-year patterns) in another for strategic allocation.
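The difference between filtering during search and filtering afterwards is easiest to see on a tiny example. The records, tickers, and predicate below are hypothetical, and the sketch uses an exhaustive scan; real vector databases apply the constraint inside index traversal, but the effect on the result set is the same.

```python
import math

def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

# Hypothetical records: an embedding plus the metadata used for filtering.
securities = [
    {"ticker": "AAA", "sector": "Technology", "market_cap_bn": 250.0, "embedding": [0.9, 0.1, 0.2]},
    {"ticker": "BBB", "sector": "Technology", "market_cap_bn": 4.0, "embedding": [0.88, 0.12, 0.21]},
    {"ticker": "CCC", "sector": "Industrials", "market_cap_bn": 60.0, "embedding": [0.91, 0.09, 0.19]},
    {"ticker": "DDD", "sector": "Technology", "market_cap_bn": 35.0, "embedding": [0.2, 0.9, 0.1]},
    {"ticker": "EEE", "sector": "Technology", "market_cap_bn": 18.0, "embedding": [0.8, 0.3, 0.1]},
]

def large_cap_tech(record):
    return record["sector"] == "Technology" and record["market_cap_bn"] > 10

def filtered_search(query, records, k, predicate):
    """Apply the metadata constraint while scanning, so every result satisfies it."""
    eligible = (r for r in records if predicate(r))
    ranked = sorted(eligible, key=lambda r: cosine(query, r["embedding"]), reverse=True)
    return [r["ticker"] for r in ranked[:k]]

def post_filtered_search(query, records, k, predicate):
    """Take the top k by similarity first, then filter: may return fewer than k."""
    ranked = sorted(records, key=lambda r: cosine(query, r["embedding"]), reverse=True)
    return [r["ticker"] for r in ranked[:k] if predicate(r)]

query = [0.9, 0.1, 0.2]
print(filtered_search(query, securities, 2, large_cap_tech))       # ['AAA', 'EEE']
print(post_filtered_search(query, securities, 2, large_cap_tech))  # ['AAA']
```

Post-filtering loses a result here because two of the closest vectors fail the constraint, which is the failure mode integrated filtering avoids.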

Tuning the accuracy-speed tradeoff: HNSW’s ef_construction parameter controls index build quality—higher values (200-400) improve recall at the cost of build time. The ef_search parameter governs query-time accuracy. For financial applications, start with ef_construction=200 and ef_search=100, adjusting based on measured recall against held-out ground truth.
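Measuring recall against ground truth needs only a small helper. The sketch below assumes each held-out query already has two result lists, one from the ANN index and one from an exhaustive scan; the function names and sample IDs are illustrative.

```python
def recall_at_k(approximate_ids, exact_ids, k):
    """Fraction of the true top-k neighbors that the ANN index also returned."""
    truth = set(exact_ids[:k])
    return len(truth & set(approximate_ids[:k])) / len(truth)

def mean_recall(results, k):
    """Average recall over (approximate, exact) result pairs from a held-out query set."""
    return sum(recall_at_k(approx, exact, k) for approx, exact in results) / len(results)

# Hypothetical held-out queries: IDs from the ANN index vs. an exhaustive scan.
held_out = [
    (["A", "B", "C", "D", "E"], ["A", "B", "C", "D", "F"]),  # 4 of 5 found
    (["G", "H", "I", "J", "K"], ["G", "H", "I", "J", "K"]),  # 5 of 5 found
]
print(mean_recall(held_out, k=5))  # 0.9
```

Re-running this check after each parameter change shows whether a faster setting is costing more recall than the workload can tolerate.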

Integration Architecture: Connecting Vector Databases to Excel

Three Integration Patterns to Consider

Three patterns connect Excel to vector databases. Each fits different use cases and organizational contexts.

Pattern 1: API-Mediated Queries — Excel calls vector database APIs directly for on-demand similarity search. Best for ad-hoc queries requiring real-time results. Moderate complexity; requires VBA or Power Query comfort.

Pattern 2: ETL Pipeline Integration — Automated workflows vectorize data, execute scheduled queries, push results to Excel dashboards. Best for batch processing and standardized outputs. Higher complexity; minimal analyst technical skill needed.

Pattern 3: Hybrid MCP Approach — Model Context Protocol creates a standardized layer bridging structured data with vector search through natural language interfaces. Best for comprehensive integration emphasizing analyst accessibility.

| Pattern | Best For | Complexity | Latency | Analyst Skill Required |
| --- | --- | --- | --- | --- |
| API-Mediated | Ad-hoc queries | Medium | Real-time (ms) | Moderate |
| ETL Pipeline | Batch processing | High | Scheduled | Low |
| Hybrid MCP | Comprehensive integration | Medium | Near real-time | Low |

API-Mediated Integration: Direct Query Patterns

API-mediated integration positions Excel as a client calling vector database endpoints. The flow: Excel → API Layer → Vector Database → JSON Response → Excel Results.

The API layer handles authentication, transforms Excel-friendly inputs (tickers, date ranges, thresholds) into vector queries, and converts JSON responses to tabular formats Excel consumes directly.
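A minimal sketch of that translation step, written in Python for illustration, might look like the following. The in-memory `EMBEDDINGS` dictionary and `query_vector_db` function are stand-ins for a real vector database client, and the embedding values are made up.

```python
import json
import math

# Stand-in for the vector database: ticker -> embedding (hypothetical values).
EMBEDDINGS = {
    "AAPL": [0.90, 0.10, 0.30],
    "MSFT": [0.85, 0.15, 0.35],
    "XOM": [0.10, 0.95, 0.20],
}

def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def query_vector_db(embedding, top_k, exclude):
    """Placeholder for a real client call; returns a JSON string as an HTTP API would."""
    hits = [
        {"ticker": ticker, "score": round(cosine(embedding, vec), 4)}
        for ticker, vec in EMBEDDINGS.items()
        if ticker != exclude
    ]
    hits.sort(key=lambda hit: hit["score"], reverse=True)
    return json.dumps({"matches": hits[:top_k]})

def similar_securities(ticker, top_k=5, min_score=0.0):
    """Translate Excel-friendly inputs into a vector query and return tabular rows."""
    ticker = ticker.strip().upper()
    if ticker not in EMBEDDINGS:
        raise KeyError(f"No embedding found for ticker '{ticker}'")
    response = json.loads(query_vector_db(EMBEDDINGS[ticker], top_k, exclude=ticker))
    rows = [["Ticker", "Similarity"]]
    rows += [[m["ticker"], m["score"]] for m in response["matches"] if m["score"] >= min_score]
    return rows

for row in similar_securities(" aapl ", top_k=2):
    print(row)
```

Returning a header row plus plain value rows keeps the output in a shape that a spreadsheet range or a Power Query table can consume without further parsing.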

VBA approach: Custom functions call REST endpoints via XMLHTTP. An analyst enters a ticker; the function retrieves that security’s embedding, queries for similar vectors, and returns ranked results to adjacent cells. Maximum flexibility, but requires maintenance as APIs evolve.

Power Query approach: Parameterized queries connect to API endpoints with refresh operations re-executing current parameters. Cleaner Excel integration, less real-time interactivity.

Production considerations:

Rate limiting prevents runaway volumes—a misbehaving spreadsheet shouldn’t overwhelm infrastructure. Implement per-user and per-workbook limits at the API layer.

Caching eliminates redundant queries. The same portfolio queried twice in a session returns cached results instantly. Time-based invalidation ensures freshness.

Error handling maintains trust. “Vector database unavailable” beats cryptic failures. Graceful degradation—cached fallbacks during outages—keeps workflows functional.
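The rate limiting and caching considerations can be sketched together. This is a simplified single-process illustration: the class names, the limits, and the `fake_query` stand-in are assumptions, and a shared deployment would usually back both structures with an external store.

```python
import time

class TTLCache:
    """Cache query results and expire them after ttl_seconds (time-based invalidation)."""
    def __init__(self, ttl_seconds, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock
        self._store = {}

    def get(self, key):
        entry = self._store.get(key)
        if entry is None:
            return None
        value, stored_at = entry
        if self.clock() - stored_at > self.ttl:
            del self._store[key]
            return None
        return value

    def put(self, key, value):
        self._store[key] = (value, self.clock())

class RateLimiter:
    """Allow at most max_calls per window_seconds for each key (user or workbook)."""
    def __init__(self, max_calls, window_seconds, clock=time.monotonic):
        self.max_calls = max_calls
        self.window = window_seconds
        self.clock = clock
        self._calls = {}

    def allow(self, key):
        now = self.clock()
        recent = [t for t in self._calls.get(key, []) if now - t < self.window]
        allowed = len(recent) < self.max_calls
        if allowed:
            recent.append(now)
        self._calls[key] = recent
        return allowed

def cached_query(user, ticker, run_query, cache, limiter):
    """Serve from cache when possible; otherwise check the rate limit and query."""
    cached = cache.get(ticker)
    if cached is not None:
        return cached
    if not limiter.allow(user):
        raise RuntimeError("Rate limit reached. Please wait a moment and retry.")
    result = run_query(ticker)
    cache.put(ticker, result)
    return result

calls = []
def fake_query(ticker):  # hypothetical stand-in for the vector database call
    calls.append(ticker)
    return [f"{ticker}-peer-1", f"{ticker}-peer-2"]

cache = TTLCache(ttl_seconds=300)
limiter = RateLimiter(max_calls=2, window_seconds=60)
cached_query("analyst1", "AAPL", fake_query, cache, limiter)
cached_query("analyst1", "AAPL", fake_query, cache, limiter)  # served from cache
print(len(calls))  # 1
```

Checking the cache before the limiter means repeated lookups of the same portfolio never count against an analyst's quota.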

The Build vs. Buy Calculation. Custom Excel interfaces require ongoing maintenance as APIs evolve, edge cases emerge, and analyst needs shift. Daloopa’s Excel-compatible infrastructure handles the integration complexity—your team invests in analytical workflows rather than middleware maintenance.

ETL Pipeline Integration: Automated Vectorization Workflows

ETL integration removes analysts from the execution path. Automated pipelines handle everything: extraction, embedding generation, vector storage, scheduled queries, result delivery.

The architecture spans systems: Excel/data sources → Extraction → Embedding service → Vector database → Query scheduler → Result aggregation → Excel dashboards.

Pipeline components:

Data extraction pulls from Excel files, databases, and feeds on schedule. Format normalization, missing data handling, and validation occur before downstream processing.

Embedding generation transforms data into vectors. Batch processing enables efficient GPU utilization. Incremental updates process only changed data, reducing daily compute requirements.

Vector ingestion loads embeddings with metadata tagging. Bulk inserts optimize throughput; index refresh scheduling balances performance against ingestion lag.

Scheduled queries execute standardized searches at defined intervals. Results aggregate into analyst-ready formats—ranked lists, exception reports, similarity matrices.

Result delivery pushes outputs to Excel-accessible locations: shared drives, Excel Online workbooks, or dashboard data sources analysts connect to locally.

Automation essentials: End-to-end monitoring tracks pipeline health. Alerting notifies operations of failures before analysts notice missing data. Retry logic with exponential backoff handles transient issues.
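Retry logic with exponential backoff is compact enough to show in full. The sketch below is one common formulation, with the delay doubling on each attempt and a jitter factor applied; the parameter defaults and the `flaky_ingest` step are illustrative, and production code would catch only the exception types known to be transient.

```python
import random
import time

def retry_with_backoff(operation, max_attempts=5, base_delay=1.0, max_delay=60.0,
                       sleep=time.sleep, jitter=random.random):
    """Retry operation on failure, doubling the wait each time, up to max_attempts."""
    for attempt in range(max_attempts):
        try:
            return operation()
        except Exception:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the failure to monitoring
            delay = min(max_delay, base_delay * 2 ** attempt)
            sleep(delay * (0.5 + 0.5 * jitter()))  # jitter spreads out simultaneous retries

# Usage with a hypothetical flaky pipeline step that succeeds on the third try.
attempts = {"count": 0}
def flaky_ingest():
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise ConnectionError("transient failure")
    return "ingested"

waits = []
result = retry_with_backoff(flaky_ingest, sleep=waits.append, jitter=lambda: 1.0)
print(result, waits)  # ingested [1.0, 2.0]
```

Injecting `sleep` and `jitter` as parameters keeps the function testable without real waiting, which matters when the same helper wraps every stage of a scheduled pipeline.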

Hybrid Approach: Model Context Protocol (MCP) Integration

MCP provides a standardized protocol connecting AI capabilities to data sources. For vector database Excel integration, MCP creates an abstraction enabling simplified queries against vectorized data while maintaining governance.

The flow: Excel → MCP Client → MCP Server → Vector Database + Financial Systems → Unified Response → Excel Output.

Financial workflow benefits:

Preserved Excel primacy — Analysts stay in spreadsheets. Results surface through familiar interfaces.

Natural language accessibility — “Find securities that traded similarly to AAPL during the 2022 drawdown” translates to appropriate vector operations without requiring embedding expertise.

Governance integration — MCP servers enforce access controls, audit queries, and ensure compliance. The protocol layer provides natural security insertion points.

Multi-source unification — A single integration bridges vector databases, traditional databases, and document repositories for unified search.
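At the protocol level, an MCP client invokes a server-side tool with a JSON-RPC 2.0 `tools/call` request. The sketch below shows the general message shape only; the tool name `find_similar_securities`, its arguments, and the sample response are hypothetical and do not describe any particular server.

```python
import json

def build_tool_call(request_id, tool_name, arguments):
    """Build an MCP tool invocation as a JSON-RPC 2.0 request."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }

def extract_text(response):
    """Collect the text blocks from a tool result, raising if the tool reported an error."""
    result = response["result"]
    if result.get("isError"):
        raise RuntimeError("Tool call failed")
    return [block["text"] for block in result["content"] if block["type"] == "text"]

# Hypothetical tool and arguments, for illustration only.
request = build_tool_call(
    1,
    "find_similar_securities",
    {"reference_ticker": "AAPL", "period": "2022 drawdown", "top_k": 5},
)
print(json.dumps(request, indent=2))

# Hypothetical response in the shape a server might return.
sample_response = {
    "jsonrpc": "2.0",
    "id": 1,
    "result": {"content": [{"type": "text", "text": "MSFT,0.87"}], "isError": False},
}
print(extract_text(sample_response))  # ['MSFT,0.87']
```

Because every tool call passes through the server in this one format, access checks and audit logging can be applied in a single place.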

For teams pursuing hybrid embedding strategies, Daloopa’s MCP implementation provides the structured fundamental data layer—financials, estimates, KPIs—that concatenates with learned representations. Pre-validated data means your engineered features carry signal rather than data quality artifacts.

High-Impact Use Case Examples: Portfolio Optimization, Fraud Detection, and Forecasting

1) Portfolio Optimization and Similarity-Based Security Selection

The problem: Traditional screening filters on observable attributes—sector, market cap, P/E ratio. This misses securities with similar behavioral characteristics but different surfaces. A mid-cap industrial and large-cap technology company might exhibit nearly identical factor exposures, but attribute screening would never surface the relationship.

The vector solution: Embed price histories, fundamentals, and factor exposures into unified vectors. Similarity search finds securities that behave like a reference based on learned patterns, not predefined categories.

Excel integration: An analyst enters a ticker. The integration queries similar securities and returns ranked results with similarity scores and explanatory metadata directly in the spreadsheet.

In practice: An analyst managing a concentrated position facing regulatory uncertainty needs alternatives with similar return characteristics but different exposure. Rather than manually screening sectors, they query for behaviorally similar securities. Vector search surfaces candidates across industries—perhaps a healthcare equipment company and defense contractor both exhibiting similar volatility profiles and factor sensitivities to the original holding.

Outcome: Faster idea generation through semantic search. Non-obvious diversification candidates discovered. Concentration risk reduced through identification of true behavioral exposure rather than categorical proxies.

2) Fraud Detection and Transaction Anomaly Identification

The problem: Rule-based detection captures known patterns but misses novel schemes. Fraudsters adapt; static rules don’t. Manual review doesn’t scale, and aggressive thresholds generate false positives that erode trust.

The vector solution: Embed transaction sequences capturing timing, amounts, counterparties, and velocity. Normal transactions cluster in vector space; anomalies appear as outliers distant from established norms.

Excel integration: Anomaly scores and flagged transactions flow to analyst dashboards. Each flag includes transaction details, anomaly score, and nearest normal transactions for comparison—enabling rapid assessment.

In practice: A corporate payment exhibits unusual patterns triggering no existing rules. Vector detection identifies it as distant from established payment clusters. The flag reaches an analyst’s dashboard: “This transaction’s embedding is 3.2 standard deviations from normal patterns for this counterparty. Nearest normal transaction shown for comparison.”

Outcome: Earlier detection through pattern-based identification. Reduced false positives distinguishing unusual-but-normal from genuinely anomalous. Auditable trails with quantified metrics.
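A flag such as "3.2 standard deviations from normal patterns" can come from a score like the one sketched here. This is one simple way to do it, assuming distance from the centroid of known-normal embeddings is a reasonable measure; the data is randomly generated, and the threshold of 3.0 is an assumption to tune against labeled history.

```python
import math
import random
import statistics

def centroid(vectors):
    """Component-wise mean of a list of equal-length vectors."""
    return [statistics.fmean(column) for column in zip(*vectors)]

def anomaly_score(candidate, normal_vectors):
    """Standard deviations by which the candidate's distance from the centroid exceeds the norm."""
    center = centroid(normal_vectors)
    distances = [math.dist(vec, center) for vec in normal_vectors]
    return (math.dist(candidate, center) - statistics.fmean(distances)) / statistics.stdev(distances)

def nearest_normal(candidate, normal_vectors):
    """Index of the closest known-normal embedding, shown to the analyst for comparison."""
    return min(range(len(normal_vectors)), key=lambda i: math.dist(candidate, normal_vectors[i]))

# Hypothetical data: 200 normal payment embeddings for one counterparty, 4 dimensions each.
random.seed(7)
normal_payments = [[random.gauss(0, 1) for _ in range(4)] for _ in range(200)]
suspicious = [6.0, 6.0, 6.0, 6.0]

score = anomaly_score(suspicious, normal_payments)
flagged = score > 3.0  # threshold is an assumption; tune it against labeled history
neighbor = nearest_normal(suspicious, normal_payments)
print(f"score={score:.1f} flagged={flagged} nearest_normal_index={neighbor}")
```

Returning the nearest normal transaction alongside the score gives the analyst a concrete comparison point rather than a bare number.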

3) Time Series Forecasting and Pattern Matching

The problem: Forecasting models struggle with regime changes. Models trained on low-volatility data produce unreliable forecasts when volatility spikes. Historical analogues exist—similar conditions have occurred—but manual identification across years of data is impractical.

The vector solution: Embed historical periods into vectors capturing characteristic patterns. Query current conditions against this index to identify analogues. Use matched outcomes to inform probabilistic forecasting.

Excel integration: Pattern match results feed Excel forecasting models. Analysts receive ranked historical analogues with similarity scores for scenario analysis grounded in actual outcomes.

In practice: Current conditions show unusual characteristics—elevated volatility, inverted yield curve, specific sector rotation. An analyst queries the historical database. Results: “Current 90-day embedding most similar to Q4 2018 (0.89 similarity), Q3 2015 (0.84), Q1 2008 (0.81).” The analyst examines subsequent outcomes from matched periods to inform projections.

Outcome: Forecasts grounded in historical precedent. Faster identification of relevant analogues. Improved scenario planning with quantified similarity to past conditions.
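Once analogues are matched, one simple way to turn them into a scenario range is a similarity-weighted average of what happened next. In the sketch below the similarity scores echo the example above, while the forward returns are made-up placeholders and not historical figures; the field names are also illustrative.

```python
def weighted_scenario(analogues):
    """Similarity-weighted mean of subsequent outcomes, plus the observed range."""
    total_weight = sum(a["similarity"] for a in analogues)
    expected = sum(a["similarity"] * a["forward_return"] for a in analogues) / total_weight
    outcomes = [a["forward_return"] for a in analogues]
    return {"expected": expected, "low": min(outcomes), "high": max(outcomes)}

# Placeholder outcomes for three matched periods (NOT real historical returns).
matches = [
    {"label": "analogue_1", "similarity": 0.89, "forward_return": 0.10},
    {"label": "analogue_2", "similarity": 0.84, "forward_return": 0.02},
    {"label": "analogue_3", "similarity": 0.81, "forward_return": -0.05},
]
scenario = weighted_scenario(matches)
print(f"expected={scenario['expected']:.4f} range=({scenario['low']}, {scenario['high']})")
```

Reporting the range next to the weighted mean keeps the spread of past outcomes visible, which matters more than the point estimate when only a few analogues match.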

For deeper guidance on combining vector search with retrieval-augmented generation, read our guide on RAG Systems for Financial Tables.

Implementation Best Practices and Performance Optimization

Query Performance Tuning for Financial Workloads

Default settings rarely optimize for financial workloads, which prioritize low latency and high recall over raw throughput.

Index parameters:

For HNSW, ef_construction controls build-time quality. Values of 200-400 produce better graph connectivity and recall at the cost of longer builds. Index construction happens once; queries run continuously—err toward quality.

The M parameter controls connections per node. Higher values (16-64) improve recall but increase memory and latency. Start with M=32; adjust based on measured performance.

Query parameters:

ef_search (HNSW) or nprobe (IVF) controls query-time accuracy. For real-time trading requiring sub-10ms response, start with ef_search=50. For batch queries tolerating 100ms, ef_search=200 maximizes accuracy.

Batch vs. single queries: Single queries optimize latency; batch queries optimize throughput. Interactive Excel use cases favor single queries. Scheduled pipelines benefit from batching.

Target latencies:

| Use Case | Target | Acceptable |
| --- | --- | --- |
| Real-time trading signals | < 5ms | < 15ms |
| Interactive analyst queries | < 50ms | < 200ms |
| Batch anomaly detection | < 500ms/query | < 2s/query |
| Historical pattern matching | < 1s | < 5s |

Monitoring: Track p50, p95, p99 latency. Alert on p95 degradation before it impacts median performance. Monitor recall against ground truth periodically to catch index degradation.
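Percentile tracking needs nothing beyond the standard library. The sketch below computes p50, p95, and p99 from a batch of latency samples and checks p95 against a budget; the sample values are hypothetical, and the 200 ms budget is taken from the acceptable bound for interactive analyst queries in the table above.

```python
import statistics

def latency_percentiles(samples_ms):
    """Return p50, p95, and p99 from a list of query latencies in milliseconds."""
    cuts = statistics.quantiles(samples_ms, n=100, method="inclusive")
    return {"p50": cuts[49], "p95": cuts[94], "p99": cuts[98]}

def p95_alert(samples_ms, p95_budget_ms):
    """True when the 95th percentile exceeds the latency budget for this workload."""
    return latency_percentiles(samples_ms)["p95"] > p95_budget_ms

# Hypothetical sample: mostly fast queries with a slow tail.
samples = [8.0] * 90 + [40.0] * 8 + [300.0] * 2
stats = latency_percentiles(samples)
print(stats)  # {'p50': 8.0, 'p95': 40.0, 'p99': 300.0}
print(p95_alert(samples, p95_budget_ms=200.0))  # False
```

The median here looks healthy while p99 is already far outside the budget, which is why tail percentiles are the ones to alert on.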

Scaling Strategies for Growing Data Volumes

Financial time series accumulate continuously. A single equity’s daily history grows by approximately 250 vectors annually (one per trading day; U.S. markets typically have 250 to 253 per year). Across 5,000 securities with multiple embedding types and granularities, growth compounds rapidly.

- Index refresh: Full rebuilds become impractical at scale. Incremental updates—inserting new vectors into existing graphs—maintain performance without rebuild overhead. Schedule optimization passes during low-usage windows.

- Partitioning: Time-based partitioning (separate indexes per quarter/year) enables efficient historical queries and simplifies lifecycle management. Older partitions move to cost-optimized storage; recent partitions stay on premium infrastructure.

- Tiered architecture: Hot data (recent quarters) on memory-optimized instances for lowest latency. Warm data (historical years) on cost-optimized storage with acceptable latency tradeoffs. Route queries based on requested time range.
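Routing by requested time range can be sketched as a mapping from dates to quarterly partitions, split by tier. The partition naming scheme, the four-quarter hot window, and the function names are assumptions made for illustration.

```python
from datetime import date

def quarter_index(day: date) -> int:
    """Running count of quarters, so consecutive quarters differ by exactly 1."""
    return day.year * 4 + (day.month - 1) // 3

def label(index: int) -> str:
    return f"{index // 4}Q{index % 4 + 1}"

def route_query(start: date, end: date, today: date, hot_quarters: int = 4):
    """Map a requested date range to quarterly partitions, split by storage tier."""
    cutoff = quarter_index(today) - hot_quarters + 1  # oldest quarter still in the hot tier
    plan = {"hot": [], "warm": []}
    for index in range(quarter_index(start), quarter_index(end) + 1):
        plan["hot" if index >= cutoff else "warm"].append(label(index))
    return plan

plan = route_query(date(2023, 2, 1), date(2024, 8, 15), today=date(2024, 9, 30))
print(plan)
# {'hot': ['2023Q4', '2024Q1', '2024Q2', '2024Q3'], 'warm': ['2023Q1', '2023Q2', '2023Q3']}
```

A query confined to recent quarters touches only the hot tier, so interactive lookups avoid the slower warm partitions unless the analyst explicitly asks for older history.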

Designing Excel Interfaces for Non-Technical Users

Analyst adoption determines whether vector database investment generates returns.

- Minimize inputs. The ideal interface requires one input (reference security) and delivers useful results. Every additional required parameter reduces adoption. Hide advanced options until needed.

- Maximize useful outputs. “MSFT is 0.87 similar to AAPL” teaches less than “MSFT is 0.87 similar to AAPL, primarily due to shared momentum characteristics and factor exposures.” Context enables action.

- Clear error messaging. “No securities found above 0.7 threshold—try reducing threshold or broadening criteria” beats “Query failed.”

- Graceful degradation. When infrastructure is unavailable, return cached results with freshness indicators rather than failing. Let analysts decide if stale results suffice.

Training focus: Interpretation over mechanics. Analysts need to understand what similarity scores mean and how to incorporate results—not HNSW graph traversal details.

For foundational guidance on structuring data for AI workloads, see Financial Database Design: Tabular Examples for AI-Ready Data Architecture.

Future Directions: Emerging Capabilities and Strategic Considerations

Advances in Vector Database Technology

Improved hybrid search combines vector similarity with keyword matching and metadata filtering in unified queries. Rather than vector search followed by filtering, hybrid approaches integrate constraints into optimized query plans—reducing latency when filters are restrictive.

Real-time indexing reduces lag between ingestion and availability. For trading applications where embedding freshness matters, near-instantaneous updates enable queries against just-generated vectors rather than waiting for batch refreshes.

Multimodal embeddings encode multiple data types—time series, text, images—into unified spaces. Embed earnings call transcripts alongside price histories for queries like “securities with similar price patterns AND similar management commentary sentiment.”

The Path Forward: Building AI-Native Financial Infrastructure

Vector databases represent infrastructure investment, not point solutions.

Start bounded. Portfolio similarity offers clear value, measurable outcomes, and limited scope. Prove value with a constrained pilot before enterprise rollout.

Invest in data quality. Vector search quality depends on embedding quality, which depends on input data quality. Subtle failures (poor similarity results) are harder to diagnose than obvious ones (query errors). Robust validation and quality monitoring are prerequisites.

Design for adoption. Infrastructure that analysts don’t use generates no value. Involve end users in interface design. Prioritize Excel integration over dashboard proliferation. Measure adoption alongside technical metrics.

Vector databases for financial time series analysis are becoming foundational for AI-native workflows and an essential capability for organizations building an analytical competitive advantage.

Getting Started with Vector Database Integration

Vector databases transform what’s possible in financial time series analysis—semantic similarity search, scalable anomaly detection, and pattern recognition across millions of data points become practical operations rather than theoretical capabilities. But technology alone doesn’t create value. Excel integration makes these capabilities accessible to the analysts who drive decisions; without it, even the most sophisticated infrastructure sits idle.

The path forward depends on your context. API-mediated integration suits teams with moderate technical capability seeking real-time queries. ETL pipelines serve organizations prioritizing standardized outputs with minimal analyst burden. Hybrid MCP approaches offer the most accessible path for comprehensive integration while maintaining governance.

Start with a bounded proof-of-concept—portfolio similarity across a defined universe provides clear success metrics and manageable scope. Validate latency, result quality, and actual analyst usage before expanding. Technical infrastructure is only as valuable as its adoption.

For teams ready to accelerate vector database integration with production-ready financial data infrastructure, explore how Daloopa’s API enables seamless Excel compatibility with structured financial data. For broader AI integration requirements, Daloopa’s MCP implementation connects financial data systems to AI-native tools through standardized protocols, bridging vector-powered search with the structured data pipelines that power financial analysis.

References

1. Malkov, Yu. A., and D. A. Yashunin. “Efficient and Robust Approximate Nearest Neighbor Search Using Hierarchical Navigable Small World Graphs.” arXiv, 30 Mar. 2016.

2. Aumüller, Martin, Erik Bernhardsson, and Alexander Faithfull. “ANN-Benchmarks: A Benchmarking Tool for Approximate Nearest Neighbor Algorithms.” ANN-Benchmarks, 2021.

3. “Faiss Indexes.” Facebook AI Research, GitHub, 29 July 2025.

4. Na, Sanghoon, and Haizhao Yang. “Curse of Dimensionality in Neural Network Optimization.” arXiv, 24 June 2025.

5. “Accelerated Vector Search: Approximating with NVIDIA cuVS Inverted Index.” NVIDIA Technical Blog, 7 Nov. 2024.