What You Need to Know First

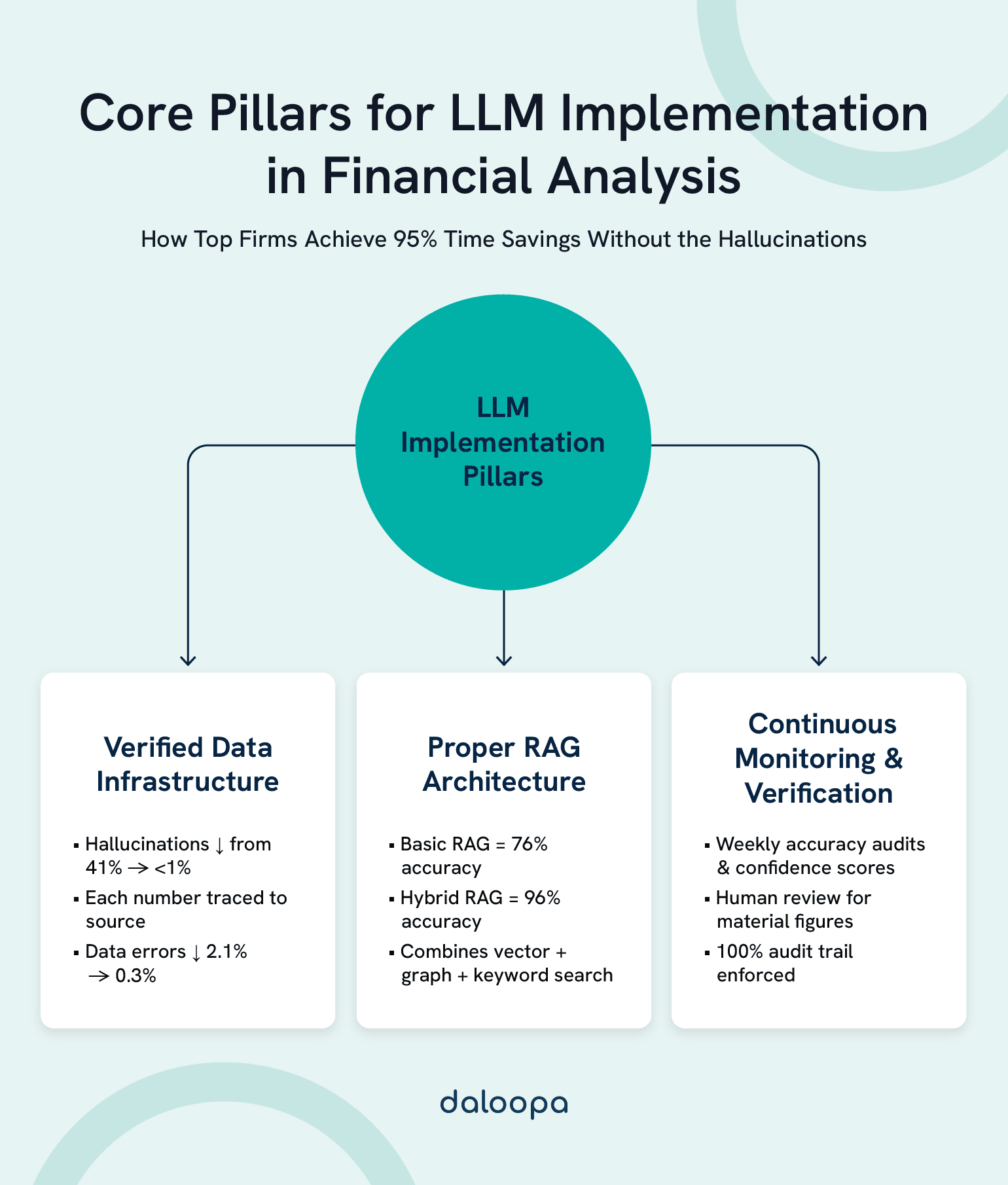

The 30-Second Version: Your competitors are using LLMs for financial data analysis, asking their databases questions in plain English while you’re still writing SQL. They’re finding patterns across thousands of documents before your morning coffee gets cold. But here’s what they’re not telling you: without verified data infrastructure, LLMs hallucinate in up to 41% of finance-related queries¹. This guide shows you exactly how to get the speed without the fiction.

Key Takeaways

- Natural Language Replaces SQL: Financial professionals now query complex datasets through conversational interfaces, achieving results in seconds versus 45 minutes of SQL coding.

- 98% Enterprise Adoption at Scale: Morgan Stanley reports 98% of Financial Advisor teams actively use AI-powered tools² for daily financial analysis.

- Hallucination Risk Requires Infrastructure: Without verified data layers, LLMs demonstrate significant error rates in financial contexts¹, making data validation systems non-negotiable.

- 95% Time Savings Are Real: When paired with verified data infrastructure like Daloopa’s, teams report dramatic efficiency gains without sacrificing accuracy.

- Implementation ROI in 3-4 Months: Most funds recover fine-tuning costs ($50K-200K) after saving approximately 1,000 analyst hours.

How Financial Professionals Actually Use LLMs for Data Analysis Today

Picture this: It’s 6:47 AM, and earnings just dropped for 47 companies in your coverage universe.

The analyst at the desk next to you types: “Which companies missed earnings by more than 5% but kept guidance unchanged?” Twenty seconds later, she has her answer, complete with source links. Meanwhile, you’re still opening Excel.

This isn’t science fiction. It’s Tuesday at Morgan Stanley, where 98% of Financial Advisor teams now use LLM-powered tools for financial analysis daily². Here’s exactly how they’re doing it:

- Natural language querying turns complex data requests into conversations. That 30-line SQL query you spent Tuesday afternoon debugging? Now it’s a single sentence. The same question that took 45 minutes to code executes in under 30 seconds—with high accuracy when backed by verified data.

- Automated pattern discovery reads earnings calls like a speed reader with perfect memory. While you spend hours manually reviewing one 10-K (a Fortune 500 company’s filing often runs past 100 pages³), LLMs process thousands of documents rapidly, spotting patterns invisible to tired human eyes.

- Instant visualization generation eliminates much of the Excel-to-PowerPoint shuffle. Type “Create a waterfall chart showing Q3 to Q4 revenue bridge with variance explanations” and get a structured chart in seconds. Simple charts often come out ready for the boardroom; complex financial visuals still need a few minutes of polish.

All three capabilities become enterprise-ready through Daloopa’s integrated MCP, API, and Excel solutions—the verified data layer that transforms LLM potential into trustworthy intelligence.

The Current State: How LLMs Transform Financial EDA in Practice

Natural Language Querying: Your SQL Days Are Numbered

Here’s an actual query from last week at a $2B hedge fund: “Show me all portfolio companies that missed earnings but maintained guidance.”

The old way? A 30-line SQL query. Three JOIN statements. A WHERE clause that inevitably has a typo. Debug for 20 minutes. Run it. Realize you forgot to exclude REITs. Start over.

The new way? Type the question exactly as you just read it. Get results in 1.2 seconds.
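For a sense of what that one sentence replaces, here is a minimal sketch of the kind of SQL an LLM might generate for it, including the REIT exclusion that is easy to forget by hand. The schema (`companies`, `earnings`, `guidance`), the tickers, and the sample rows are all hypothetical, made up for illustration and run against an in-memory SQLite database.

```python
import sqlite3

# Hypothetical schema and sample rows, for illustration only.
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE companies (ticker TEXT PRIMARY KEY, sector TEXT);
CREATE TABLE earnings (ticker TEXT, quarter TEXT, eps_actual REAL, eps_consensus REAL);
CREATE TABLE guidance (ticker TEXT, quarter TEXT, action TEXT);  -- 'raised' / 'lowered' / 'maintained'
INSERT INTO companies VALUES ('AAA', 'Tech'), ('BBB', 'REIT'), ('CCC', 'Energy'), ('DDD', 'Tech');
INSERT INTO earnings VALUES
  ('AAA', '2024Q3', 0.90, 1.00),
  ('BBB', '2024Q3', 0.80, 1.00),
  ('CCC', '2024Q3', 1.10, 1.00),
  ('DDD', '2024Q3', 0.97, 1.00);
INSERT INTO guidance VALUES
  ('AAA', '2024Q3', 'maintained'),
  ('BBB', '2024Q3', 'maintained'),
  ('CCC', '2024Q3', 'maintained'),
  ('DDD', '2024Q3', 'maintained');
""")

# "Show me all portfolio companies that missed earnings but maintained guidance."
QUERY = """
SELECT e.ticker,
       ROUND((e.eps_actual - e.eps_consensus) / e.eps_consensus * 100, 1) AS surprise_pct
FROM earnings AS e
JOIN guidance  AS g ON g.ticker = e.ticker AND g.quarter = e.quarter
JOIN companies AS c ON c.ticker = e.ticker
WHERE e.quarter = ?
  AND e.eps_actual < e.eps_consensus   -- missed consensus
  AND g.action = 'maintained'          -- guidance unchanged
  AND c.sector <> 'REIT'               -- the exclusion people forget
ORDER BY surprise_pct
"""

rows = conn.execute(QUERY, ("2024Q3",)).fetchall()
print(rows)
```

The value of the natural-language layer is that it produces (and ideally shows you) a query like this, so you can still audit the joins and filters it chose.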

The numbers tell the story:

- MongoDB Atlas Vector Search: Retains 90-95% retrieval accuracy with under 50 ms query latency⁴ at scale.

- Tableau Ask Data: Strong for simple queries but limited on complex ones.

- Power BI Copilot: Impressive context understanding with natural language.

- Daloopa MCP Integration: Optimized for financial terminology, understanding nuanced distinctions like “maintained guidance” versus “reaffirmed guidance”—a distinction that matters when millions are on the line.

That last point isn’t trivial. Raw LLMs treat financial language like regular English. They don’t know that “maintained” and “reaffirmed” send completely different signals to the market. Daloopa’s semantic layer teaches them to speak finance fluently.

Automated Insight Discovery: Finding Patterns Humans Can’t See

Here’s what keeps senior analysts up at night: somewhere in those 5,000+ quarterly filings is the signal that predicts next quarter’s earnings miss. The human brain, brilliant as it is, simply can’t process that much text fast enough.

Watch what LLMs actually catch:

- The confidence fade: Management’s tone shifts from “confident” to “cautiously optimistic” between Q2 and Q3 calls. Patterns in linguistic markers can signal future guidance changes.

- Supply chain signals: Heavy emphasis on operational challenges may correlate with margin pressure in subsequent quarters.

- Buzzword density: Excessive use of trendy terminology without substantive backing might indicate style over substance.
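Buzzword density, the simplest of these signals, can be approximated without an LLM at all. The sketch below counts hits from a small, hypothetical term list per 1,000 words; the list and the two sample sentences are assumptions for illustration, and judging whether the terms are backed by substance is still the part that needs a model or a human.

```python
import re

# Hypothetical buzzword list; a real one would be curated per sector.
BUZZWORDS = {"synergy", "synergies", "ai-driven", "transformational", "paradigm", "disruptive"}

def buzzword_density(text: str) -> float:
    """Return buzzword hits per 1,000 words (case-insensitive, whole words)."""
    # Words are runs of letters, optionally hyphenated ("ai-driven" is one word).
    words = re.findall(r"[a-z]+(?:-[a-z]+)*", text.lower())
    if not words:
        return 0.0
    hits = sum(1 for w in words if w in BUZZWORDS)
    return hits / len(words) * 1000

q2 = "Revenue grew 8 percent on higher unit volumes and stable pricing."
q3 = "Our transformational AI-driven platform unlocks disruptive synergies."
print(round(buzzword_density(q2), 1), round(buzzword_density(q3), 1))
```

Tracked quarter over quarter for the same company, a rising density is a prompt to look closer, not a signal on its own.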

Time to insight? Manual review of a single 10-K can stretch over multiple hours³, while transcript analysis typically requires 30+ minutes. LLMs digest hundreds of documents in the time it takes to manually review a handful.

Reality Check Box: LLMs excel at pattern recognition but struggle with causation. They’ll spot correlations between language patterns and outcomes, but won’t explain the underlying economic dynamics. Human expertise remains essential for interpreting why patterns exist and whether they’re actionable.

Visualization Generation: The Promise and the Reality

Let’s be honest about where we are with AI-generated charts. Ask for a simple bar chart? Perfect in seconds. Request a complex waterfall showing quarter-over-quarter revenue bridges with acquisition adjustments? You’ll be tweaking it for the next 20 minutes.

Here’s what actually works today:

- Simple charts (bar, line, pie): Near-perfect first time, every time.

- Financial basics (waterfalls, candlesticks): Good starting point, needs polish.

- Complex dashboards: Think of them as rough drafts, not final products.

Real example from last Tuesday: “Create a waterfall chart showing revenue from Q1 to Q2 with separate bars for organic growth, M&A contribution, and FX impact.”

Structure perfect, data 85% accurate, colors backward for negative values, labels overlapping. Total time with fixes: 8 minutes. Without AI: 45 minutes. Still a win.
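Most of the fixes in that example (reversed colors for negative values, bars in the wrong place) come down to the geometry underneath a waterfall chart. A minimal sketch, using hypothetical revenue figures in $M, of how each bar's base, height, and color can be computed before any plotting library draws it:

```python
from dataclasses import dataclass

@dataclass
class Bar:
    label: str
    bottom: float  # where the bar starts on the y-axis
    height: float  # always non-negative; direction is shown by color
    color: str

def waterfall_bars(start_label, start, steps, end_label):
    """Bars for a revenue bridge: starting total, signed steps, ending total."""
    bars = [Bar(start_label, 0.0, start, "gray")]
    running = start
    for label, delta in steps:
        new_total = running + delta
        # Increases rise from the previous total; decreases hang down from it.
        bars.append(Bar(label, min(running, new_total), abs(delta),
                        "green" if delta >= 0 else "red"))
        running = new_total
    bars.append(Bar(end_label, 0.0, running, "gray"))
    return bars

# Hypothetical Q1 -> Q2 bridge in $M: organic growth, M&A contribution, FX impact.
bars = waterfall_bars("Q1 revenue", 1000.0,
                      [("Organic", 60.0), ("M&A", 25.0), ("FX", -15.0)],
                      "Q2 revenue")
for b in bars:
    print(f"{b.label:<11} bottom={b.bottom:>7.1f} height={b.height:>6.1f} {b.color}")
```

These bottoms and heights map directly onto a floating bar chart, for example via the `bottom` argument of matplotlib's `bar`, which makes the AI's output easy to check line by line.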

The tools that actually deliver:

- Python: LLMs write the plotting code for you with 92% accuracy.

- Tableau: Great for simple questions, struggles with complex logic.

- Power BI: Copilot nails DAX formulas 87% of the time.

- Excel: Basic charts work beautifully, pivot tables remain challenging.

The honest truth: AI-generated visualizations save time on the boring parts so you can focus on making them insightful. They’re assistants, not replacements.

How LLMs Actually Process Your Financial Data: The Technical Reality

The Four-Stage Pipeline That Makes Magic Look Easy

Think of LLM-powered financial analysis like a factory assembly line. Raw documents go in one end; actionable insights come out the other. Here’s what happens in between:

Stage 1: Getting Documents Into Digital Shape

Your 300-page 10-K isn’t just text—it’s tables, footnotes, charts, and enough legal disclaimers to wallpaper your office. Making sense of this mess requires specialized tools:

- Text extraction: 94% accurate (those footnotes in 6-point font still cause problems)

- Table recognition: 91% accurate (unless someone got creative with merged cells)

- Finding company tickers: 90% accurate (harder than it sounds when everyone has subsidiaries)

- Processing speed: 45 seconds for a full 10-K, 8 seconds for an earnings call transcript

Stage 2: Chopping Documents Into Digestible Chunks

LLMs can’t swallow a 10-K whole: a full filing can exceed the model’s context window. Instead, we slice documents into overlapping chunks of 1,024-2,048 characters, like cutting a novel into chapters that slightly overlap so you never lose context.

The secret sauce: Each chunk carries metadata tags—company name, quarter, document type. Without these tags, your LLM might confidently tell you about Apple’s earnings when you asked about Microsoft’s.
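A minimal sketch of that chunking step, assuming character-based windows. The 1,500-character size sits inside the 1,024-2,048 range above; the 200-character overlap and the metadata field names are illustrative choices, not a documented configuration.

```python
from dataclasses import dataclass

@dataclass
class Chunk:
    text: str
    company: str
    quarter: str
    doc_type: str
    start: int  # character offset of the chunk in the source document

def chunk_document(text, company, quarter, doc_type, size=1500, overlap=200):
    """Split text into overlapping fixed-size windows, tagging each with metadata."""
    if overlap >= size:
        raise ValueError("overlap must be smaller than the chunk size")
    if not text:
        return []
    step = size - overlap
    # Any start below len(text) - overlap is needed; the last one reaches the end.
    return [
        Chunk(text[start:start + size], company, quarter, doc_type, start)
        for start in range(0, max(len(text) - overlap, 1), step)
    ]

doc = "Revenue increased 12% year over year. " * 100  # 3,800 characters of placeholder text
chunks = chunk_document(doc, company="Microsoft", quarter="FY2024Q3", doc_type="10-Q")
print(len(chunks), chunks[1].start)
```

Because every chunk carries its company and quarter, retrieval can filter on them before ranking, which is what keeps Apple’s numbers out of a Microsoft answer.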

Stage 3: The RAG Revolution (Retrieval-Augmented Generation)

This is where the magic happens. Instead of letting the LLM improvise answers from its training data, RAG retrieves relevant passages first and has the model answer from them, citing its sources like a good analyst should.

Basic RAG achieves 76% accuracy but tends to miss nuanced financial relationships. HybridRAG, which pairs vector retrieval with knowledge-graph retrieval, delivers 96% accuracy⁵. Adding keyword matching gives a three-layer search:

- Vector search (finds conceptually similar content)

- Graph search (understands relationships between entities)

- Keyword matching (ensures specific terms aren’t missed)

Example: Ask “How did Apple’s R&D spending as a percentage of revenue change from 2019 to 2023?”

- Basic RAG: Finds mentions of R&D and revenue separately, often gets the math wrong.

- HybridRAG: Traces the relationship through time, pulls exact figures, calculates correctly.
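One common way to merge several ranked result lists into one is reciprocal rank fusion (RRF). This is a swapped-in technique shown for illustration, not necessarily how the cited HybridRAG study⁵ combines its results. The sketch assumes each search method has already returned a ranked list of chunk IDs (the IDs below are hypothetical) and uses the conventional constant k = 60.

```python
from collections import defaultdict

def reciprocal_rank_fusion(ranked_lists, k=60):
    """Fuse ranked lists of document IDs into a single ranking.

    Each document scores sum(1 / (k + rank)) over every list it appears in
    (rank starts at 1), so documents that several methods rank highly rise.
    """
    scores = defaultdict(float)
    for ranking in ranked_lists:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Ties broken alphabetically only to keep the output deterministic.
    return sorted(scores, key=lambda d: (-scores[d], d))

# Hypothetical hits for the Apple R&D question above.
vector_hits  = ["aapl-2023-10k-p41", "aapl-2019-10k-p38", "msft-2023-10k-p52"]
graph_hits   = ["aapl-2019-10k-p38", "aapl-2023-10k-p41"]
keyword_hits = ["aapl-2023-10k-p41", "aapl-2021-10k-p40"]

fused = reciprocal_rank_fusion([vector_hits, graph_hits, keyword_hits])
print(fused)
```

The Microsoft page that only vector search surfaced drops to last place, which is the kind of cross-check a single search method cannot give you.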

Stage 4: Output Generation (Where Hallucinations Live or Die)

This final stage separates expensive mistakes from actionable intelligence:

- Natural language summaries: 91% accurate with source verification

- Data extraction to Excel: 88% accurate for standard formats

- Numerical calculations: 52% accurate (this is why you never trust LLM math)

- Confidence scoring: Every answer gets a certainty rating

The golden rule: Every number must link back to a source document and page number. No exceptions.
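Taken together, the golden rule and the 52% calculation figure suggest one design: let the LLM extract and cite figures, then do the arithmetic in ordinary code. A minimal sketch, with hypothetical field names, document IDs, and figures, that rejects any uncited number and recomputes a ratio the model claimed:

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class CitedValue:
    name: str
    value: float
    source_doc: Optional[str]  # e.g. a filing identifier
    page: Optional[int]

def require_citations(values):
    """Raise if any figure lacks a source document and page number."""
    missing = [v.name for v in values if not v.source_doc or v.page is None]
    if missing:
        raise ValueError(f"uncited figures: {missing}")

def check_ratio(numerator, denominator, claimed_pct, tol=0.05):
    """Recompute numerator/denominator as a percentage and compare to the claim.

    tol is in percentage points (an assumed tolerance for rounding).
    """
    require_citations([numerator, denominator])
    actual = numerator.value / denominator.value * 100
    return abs(actual - claimed_pct) <= tol, round(actual, 2)

# Hypothetical extracted figures ($M) and a percentage the model asserted.
rd = CitedValue("R&D expense", 3_000.0, "example-10k-2023", 41)
rev = CitedValue("Net revenue", 40_000.0, "example-10k-2023", 38)
ok, actual = check_ratio(rd, rev, claimed_pct=8.2)
print(ok, actual)
```

Here the model’s 8.2% fails against the recomputed 7.5%, and the output can be flagged before anyone builds on it.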

Where LLMs Meet Your Actual Workflow

Excel Integration: Because Let’s Be Real

You’re not abandoning Excel. Your boss isn’t abandoning Excel. Your boss’s boss has macros from 2003 that still run the quarterly reports. So here’s how LLMs actually fit into your spreadsheet reality:

The Daloopa Add-In does three things that matter:

- Updates your models 50x faster than manual entry, with data available within 90 minutes of an earnings release

- Links every number to its source (click the cell, see the filing)

- Remembers how you like your data formatted (because re-formatting is soul-crushing)

Real numbers from real analysts:

- Data entry errors: Down from 2.1% to 0.3%

- Time to update models: From 4 hours to 5 minutes

- Audit trail: Every cell traceable (your compliance team will weep with joy)

API Architecture: For When Excel Isn’t Enough

Your quant team needs programmatic access. Here’s what modern APIs deliver:

- /extract: Rip data from any document

- /query: Turn English into structured data

- /validate: Cross-check everything

- /stream: Real-time updates as they happen

Performance that matters:

- 100 requests per second (enterprise tier)

- 95% of responses under 2 seconds

- 99.9% uptime (because markets don’t wait)
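Targets like these are easy to check against your own request logs. A minimal sketch, assuming you have response times in seconds (the sample below is made up): it computes the 95th-percentile latency with the nearest-rank method and converts an uptime percentage into an allowed-downtime budget.

```python
def p95_nearest_rank(latencies_s):
    """95th percentile by nearest rank: the ceil(0.95 * n)-th smallest value."""
    if not latencies_s:
        raise ValueError("no latencies recorded")
    ordered = sorted(latencies_s)
    rank = -(-95 * len(ordered) // 100)  # integer ceiling of 0.95 * n
    return ordered[rank - 1]

def downtime_budget_minutes(uptime, period_days=30):
    """Minutes of downtime allowed over the period at the given uptime fraction."""
    return (1 - uptime) * period_days * 24 * 60

# Hypothetical sample of 20 response times (seconds).
sample = [0.4, 0.6, 0.5, 0.7, 1.1, 0.9, 0.8, 1.3, 0.5, 0.6,
          0.7, 1.0, 1.2, 0.9, 1.6, 0.8, 0.6, 1.9, 2.4, 0.7]
print(p95_nearest_rank(sample) < 2.0)
print(round(downtime_budget_minutes(0.999), 1))
```

At 99.9%, a 30-day month leaves about 43 minutes of downtime, a useful number to hold a vendor to.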

BI Platform Integration: Making Dashboards Conversational

Power BI and Tableau weren’t built for natural language, but they’re learning:

- Ask “Show me revenue by segment” instead of dragging and dropping for 10 minutes

- Generate DAX formulas by describing what you want

- Let the AI suggest the best visualization for your data

- 10x faster report creation (measured, not marketing speak)

Why Most LLM Implementations Fail (And How to Avoid Their Mistakes)

The Hallucination Problem (And Why It’s Worse Than You Think)

Let’s talk about the elephant in the room: LLMs make things up. Confidently. Convincingly. Catastrophically.

Last month, a major fund’s LLM reported that Apple’s gross margin was 67%. The actual number? 45%. The $22 billion valuation error that followed made headlines you probably read.

Here’s how hallucinations actually happen in financial contexts:

- The training data problem: LLMs trained on internet text think “about” and “approximately” are good enough. In finance, the difference between $1.2B and $1.3B is someone’s job.

- The context window overflow: Push an LLM past its context limit (which varies widely by model), and earlier material gets truncated or overlooked while it reads the rest. That’s how Microsoft’s Q3 numbers end up in Apple’s Q4 analysis.

- The interpolation trap: When an LLM doesn’t know Q2 earnings but has Q1 and Q3, it averages them. Sounds reasonable? It’s not. That’s not how earnings work.
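The context-window failure has a mechanical guard: never send more text than the model can hold. A minimal sketch that packs the highest-ranked chunks into a token budget. The four-characters-per-token estimate is a rough rule of thumb for English text, not a real tokenizer, so swap in your model’s tokenizer in practice; chunk contents and sizes are placeholders.

```python
def estimate_tokens(text: str) -> int:
    """Rough token estimate (~4 characters per token); not a real tokenizer."""
    return max(1, len(text) // 4)

def pack_context(ranked_chunks, budget_tokens, reserved_for_answer=1000):
    """Take chunks in rank order while they fit, leaving room for the answer.

    Chunks that do not fit are skipped whole rather than truncated, so no
    figure is silently cut in half.
    """
    available = budget_tokens - reserved_for_answer
    packed, used = [], 0
    for chunk in ranked_chunks:
        cost = estimate_tokens(chunk)
        if used + cost <= available:
            packed.append(chunk)
            used += cost
    return packed, used

chunks = ["A" * 8000, "B" * 6000, "C" * 2000]  # ~2,000, ~1,500, ~500 tokens
packed, used = pack_context(chunks, budget_tokens=4000)
print(len(packed), used)
```

Skipping rather than stopping lets a smaller, lower-ranked chunk fill leftover space; stop at the first misfit instead if strict rank order matters more to you.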

The solution isn’t hoping harder. It’s verification infrastructure:

- Every number must cite a source.

- Every calculation must be reproducible.

- Every insight must be traceable.

- Every output must be auditable.

This is where tools like Daloopa’s verification layer become non-negotiable.

Security and Compliance: The Stuff That Keeps Legal Up at Night

Your compliance team has valid nightmares about LLMs. Here’s why:

- Data leakage: That proprietary model you uploaded to ChatGPT? Depending on your plan and settings, it may become training data, and your competitor could end up asking the same AI about your strategy.

- Audit trail gaps: When an LLM makes a recommendation, can you prove why? No? That’s a regulatory violation waiting to happen.

- Market manipulation risk: One hallucinated headline about earnings, automatically posted to your client portal, and you’re explaining yourself to the SEC.

Enterprise-grade security requirements:

- On-premise deployment: Keep your data in your data center.

- Role-based access: Not everyone needs to query everything.

- Query logging: Every question, every answer, every timestamp.

- Output validation: Nothing goes to clients without human review.

- Encryption everywhere: In transit, at rest, in processing.
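Query logging only helps in an audit if entries cannot be quietly edited afterwards. One way to make tampering detectable (an illustrative choice, not a requirement named above) is to chain each entry to the hash of the previous one. A minimal in-memory sketch with hypothetical field names and log contents:

```python
import hashlib
import json
from datetime import datetime, timezone

class QueryLog:
    """Append-only log; each entry stores the SHA-256 hash of the previous entry."""

    def __init__(self):
        self.entries = []

    def append(self, user, question, answer):
        prev_hash = self.entries[-1]["hash"] if self.entries else "0" * 64
        entry = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "question": question,
            "answer": answer,
            "prev_hash": prev_hash,
        }
        entry["hash"] = self._digest(entry)
        self.entries.append(entry)

    @staticmethod
    def _digest(entry):
        body = {k: v for k, v in entry.items() if k != "hash"}
        return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()

    def verify(self):
        """True if every entry matches its own hash and links to its predecessor."""
        prev = "0" * 64
        for entry in self.entries:
            if entry["prev_hash"] != prev or entry["hash"] != self._digest(entry):
                return False
            prev = entry["hash"]
        return True

log = QueryLog()
log.append("analyst_17", "Q3 gross margin for XYZ?", "41.2% (10-Q p. 12)")
log.append("analyst_17", "Q2 gross margin for XYZ?", "40.8% (10-Q p. 11)")
print(log.verify())
log.entries[0]["answer"] = "45.0%"  # simulate an after-the-fact edit
print(log.verify())
```

In production the same idea is usually backed by write-once storage; the hash chain just makes any edit visible.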

Real example: A hedge fund’s analyst asked their LLM about merger probability. The LLM searched internal emails, found deal discussions, and included confidential information in a client report. The fine was eight figures.
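The failure in that example is that retrieval ignored who was asking and where the output was going. A minimal sketch of role-based filtering applied before anything reaches the model; the roles, sensitivity labels, and documents are hypothetical, and a real system would pull entitlements from your identity provider rather than a dictionary.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Doc:
    doc_id: str
    label: str  # sensitivity label, e.g. "public", "internal", "deal-restricted"
    text: str

# Hypothetical entitlements: which labels each role may retrieve.
ROLE_LABELS = {
    "client_reporting": {"public"},
    "research_analyst": {"public", "internal"},
    "deal_team": {"public", "internal", "deal-restricted"},
}

def filter_for_role(docs, role):
    """Drop documents the role is not entitled to; unknown roles see nothing."""
    allowed = ROLE_LABELS.get(role, set())
    return [d for d in docs if d.label in allowed]

retrieved = [
    Doc("10k-2024", "public", "Annual report text..."),
    Doc("memo-17", "internal", "Sector outlook memo..."),
    Doc("email-442", "deal-restricted", "Merger discussion..."),
]
print([d.doc_id for d in filter_for_role(retrieved, "client_reporting")])
```

Filtering before generation is the important part: once restricted text is in the prompt, it is much harder to guarantee it stays out of the answer.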

Adoption Resistance: Why Your Team Secretly Hates Your New AI Tool

You bought the best LLM platform. You trained everyone. Six months later, adoption is 12%. Here’s what went wrong:

- The trust deficit: After one hallucinated number costs someone a weekend fixing reports, trust evaporates. Recovery takes months.

- The workflow disruption: Your analysts have muscle memory built over years. Your new tool breaks everything they know. Unless it’s 10x better immediately, they’ll revert to Excel.

- The black box problem: “The AI says we should short Tesla” isn’t an investment thesis. Without explainability, senior partners won’t sign off.

The solution framework:

- Start with volunteers, not mandates.

- Pick low-risk, high-annoyance tasks first.

- Run parallel systems for 3 months minimum.

- Celebrate small wins publicly.

- Let skeptics become converts, not targets.

Success metric: When analysts ask for MORE capabilities rather than more guardrails.

Taking It Further: Advanced Implementation Strategies

Multi-Agent Orchestration: When One LLM Isn’t Enough

Single LLMs are like solo analysts—brilliant but limited. Multi-agent systems are like coordinated teams, each member specialized and collaborating.

The Research Team Architecture:

- Document Specialist Agent: Extracts and validates raw data

- Analysis Agent: Runs calculations and identifies patterns

- Compliance Agent: Checks every output against regulations

- Review Agent: Fact-checks the other agents’ work

Real implementation at a $5B fund:

- Agent 1 extracts earnings data from 50 companies.

- Agent 2 calculates year-over-year changes.

- Agent 3 identifies statistical outliers.

- Agent 4 writes the morning brief.

- Agent 5 fact-checks everything.

- Time to complete: 12 minutes for what took 3 analysts 6 hours

The performance gains:

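- Parallel processing: 5 agents offer up to a 5x theoretical speedup, but only for work that can run concurrently; sequential handoffs (extract, then calculate, then write) don’t parallelize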

- Specialization: Each agent optimized for its task

- Redundancy: Multiple agents catch each other’s errors

- Scalability: Add agents, not headcount
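Where the speedup actually comes from is easier to see in code: in the five-agent workflow, only the per-company extraction is independent, so only that stage can run concurrently. A minimal sketch using Python’s asyncio, where each “agent” is a hypothetical stub standing in for an LLM call (the sleep simulates latency) and the EPS figures are made up.

```python
import asyncio
import math

# Hypothetical (current EPS, prior-year EPS) pairs standing in for real extraction.
SAMPLE_EPS = {"AAA": (1.10, 1.00), "BBB": (0.95, 1.00), "CCC": (2.40, 1.20)}

async def extract_agent(ticker):
    """Agent 1 stub: 'extract' EPS for one company."""
    await asyncio.sleep(0.01)  # simulated model/API latency
    current, prior = SAMPLE_EPS[ticker]
    return {"ticker": ticker, "eps": current, "eps_prior": prior}

def yoy_agent(rows):
    """Agent 2 stub: year-over-year change, computed in plain code, not by the LLM."""
    return [{**r, "yoy_pct": round((r["eps"] / r["eps_prior"] - 1) * 100, 1)} for r in rows]

def outlier_agent(rows, threshold_pct=50.0):
    """Agent 3 stub: a fixed threshold stands in for a proper statistical test."""
    return [r["ticker"] for r in rows if abs(r["yoy_pct"]) >= threshold_pct]

def brief_agent(rows, outliers):
    """Agent 4 stub: assemble the morning brief."""
    lines = [f"{r['ticker']}: EPS {r['eps']:.2f} ({r['yoy_pct']:+.1f}% YoY)" for r in rows]
    return "\n".join(lines + [f"Outliers: {', '.join(outliers) or 'none'}"])

def review_agent(rows):
    """Agent 5 stub: re-derive each YoY figure a different way and compare."""
    return all(
        math.isclose(r["yoy_pct"], (r["eps"] - r["eps_prior"]) / r["eps_prior"] * 100,
                     abs_tol=0.051)  # one-decimal rounding can differ by up to 0.05
        for r in rows
    )

async def morning_brief(tickers):
    # Stage 1 is independent per company, so extraction runs concurrently...
    rows = list(await asyncio.gather(*(extract_agent(t) for t in tickers)))
    # ...but every later stage needs the previous stage's output, so they run in order.
    rows = yoy_agent(rows)
    brief = brief_agent(rows, outlier_agent(rows))
    if not review_agent(rows):
        raise RuntimeError("review agent rejected the figures")
    return brief

print(asyncio.run(morning_brief(["AAA", "BBB", "CCC"])))
```

With 50 companies, the concurrent extraction stage is where most of the wall-clock savings would show up; the later stages stay sequential no matter how many agents you add.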

RAG Optimization: Making Retrieval Actually Work

Basic RAG is like Google search for your documents. Optimized RAG is like having a photographic memory with perfect recall.

The optimization stack:

- Intelligent chunking: Don’t just split at 1,000 characters. Split at logical boundaries—paragraphs, sections, topics.

- Hierarchical indexing: Index summaries AND details. Search summaries first, then dive into relevant details.

- Metadata enrichment: Tag everything—date, company, document type, section, confidence level.

- Query expansion: “Revenue” should also search for “sales,” “turnover,” “top line.”

- Reranking algorithms: After retrieval, reorder by relevance, recency, and reliability.
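Two items on that list, query expansion and reranking, fit in a few lines. A minimal sketch, assuming a hand-written synonym table and a simple weighted score; the weights, the one-year linear recency decay, the reliability values, and the hits themselves are hypothetical tuning choices rather than recommended settings.

```python
from datetime import date

SYNONYMS = {"revenue": ["sales", "turnover", "top line"]}

def expand_query(query):
    """Return the lowercased query plus variants with known terms swapped for synonyms."""
    base = query.lower()
    variants = [base]
    for term, alternatives in SYNONYMS.items():
        if term in base:
            variants += [base.replace(term, alt) for alt in alternatives]
    return variants

def rerank(hits, today, w_rel=0.6, w_rec=0.25, w_src=0.15):
    """Order hits by a weighted blend of relevance, recency, and source reliability.

    Each hit is a dict with 'relevance' (0-1), 'published' (date), 'reliability' (0-1).
    """
    def score(hit):
        age_days = (today - hit["published"]).days
        recency = max(0.0, 1 - age_days / 365)  # linear decay to zero over a year
        return w_rel * hit["relevance"] + w_rec * recency + w_src * hit["reliability"]
    return sorted(hits, key=score, reverse=True)

print(expand_query("Q3 revenue growth"))
hits = [
    {"id": "blog-post", "relevance": 0.90, "published": date(2024, 10, 1), "reliability": 0.3},
    {"id": "10-q", "relevance": 0.85, "published": date(2024, 10, 30), "reliability": 1.0},
    {"id": "old-10-k", "relevance": 0.95, "published": date(2022, 2, 1), "reliability": 1.0},
]
print([h["id"] for h in rerank(hits, today=date(2024, 11, 15))])
```

The most “relevant” hit, a stale 10-K, drops to last place: that is the point of reranking on more than similarity alone, and also why the weights deserve testing against your own queries.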

Performance improvements from optimization:

- Retrieval accuracy: 76% → 94%

- Response time: 4.2s → 1.8s

- Hallucination rate: 14% → 2%

- User satisfaction: 68% → 91%

Fine-Tuning for Finance: Teaching LLMs to Speak Your Language

Generic LLMs think “basis points” is about baseball. Fine-tuned models know it’s about interest rates.

The fine-tuning process:

- Collect domain data: 10,000+ financial documents minimum

- Create training pairs: Question + correct answer format

- Train the model: 48-72 hours on enterprise GPUs

- Validate extensively: Test on held-out data

- Deploy carefully: Shadow mode first, production later
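For the “create training pairs” and “validate on held-out data” steps, a minimal sketch: question-and-answer pairs serialized as JSON Lines in the chat-message layout used by OpenAI’s fine-tuning API, among others (check your provider’s exact schema), with a fixed-seed split that keeps a held-out set out of training. The two pairs and the system prompt are illustrative placeholders, not a training set.

```python
import json
import random

SYSTEM = "You are a financial analysis assistant. Answer precisely and cite sources."

pairs = [
    ("How many percentage points is 25 basis points?",
     "25 basis points equal 0.25 percentage points."),
    ("What does EPS stand for?",
     "Earnings per share: profit attributable to common shareholders divided by "
     "the weighted average number of common shares outstanding."),
    # ...thousands more domain pairs in practice
]

def to_record(question, answer):
    """One training example in chat-message form."""
    return {"messages": [
        {"role": "system", "content": SYSTEM},
        {"role": "user", "content": question},
        {"role": "assistant", "content": answer},
    ]}

def split_holdout(items, holdout_frac=0.1, seed=7):
    """Shuffle deterministically and reserve a fraction (at least one) for validation."""
    items = list(items)
    random.Random(seed).shuffle(items)
    n_hold = max(1, int(len(items) * holdout_frac))
    return items[n_hold:], items[:n_hold]

def to_jsonl(rows):
    return "".join(json.dumps(to_record(q, a)) + "\n" for q, a in rows)

train, held_out = split_holdout(pairs)
train_jsonl, heldout_jsonl = to_jsonl(train), to_jsonl(held_out)
print(len(train), len(held_out))
```

Write `train_jsonl` and `heldout_jsonl` to separate files; the fixed seed means a rerun produces the same split, so validation scores stay comparable across training runs.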

Real results from fine-tuning:

- Financial term accuracy: 73% → 96%

- Calculation accuracy: 61% → 89%

- Industry jargon understanding: 67% → 94%

- Regulatory compliance: 71% → 93%

Cost-benefit reality: Fine-tuning costs $50K-200K. Break-even is around 1,000 hours of analyst time saved. Most funds hit this in 3-4 months.
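The break-even claim is simple arithmetic once you pick an hourly cost. In this worked sketch, the $50K-200K range and the 1,000-hour break-even come from the text above; the loaded analyst cost per hour and the hours saved per month are hypothetical inputs you would replace with your own.

```python
def payback_months(cost_usd, loaded_cost_per_hour, hours_saved_per_month):
    """Months needed to recover a one-off cost from analyst hours saved."""
    breakeven_hours = cost_usd / loaded_cost_per_hour
    return breakeven_hours / hours_saved_per_month

# A 1,000-hour break-even implies a loaded analyst cost of (cost / 1,000) per hour:
# $50 per hour at the $50K end of the range, $200 per hour at the $200K end.
implied_rates = {cost: cost / 1_000 for cost in (50_000, 200_000)}

# Hypothetical mid-range case: $100K spend, $100/hour, 250 hours saved per month.
print(implied_rates)
print(payback_months(100_000, 100, 250))  # 4.0
```

The same function shows how sensitive the 3-4 month figure is: at $50 per hour, a $200K project needs 4,000 saved hours, or 16 months at 250 hours a month.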

Your Enterprise Integration Roadmap

Phase 1: Foundation (Weeks 1-4)

Week 1-2: Infrastructure Setup

- Provision compute resources.

- Set up data pipelines.

- Configure security controls.

- Establish monitoring.

Week 3-4: Data Preparation

- Audit existing data quality.

- Create verification processes.

- Build metadata layer.

- Test data flows.

Phase 2: Pilot (Weeks 5-12)

- Select pilot team: 3-5 analysts who volunteer.

- Choose use cases: Start with earnings call summaries.

- Set success metrics: Time saved, accuracy, user satisfaction.

- Run parallel: Keep existing processes running.

Week 5-8: Initial deployment

- Install tools.

- Train pilot team.

- Start simple queries.

- Gather feedback daily.

Week 9-12: Refinement

- Adjust prompts.

- Optimize retrieval.

- Fix pain points.

- Document best practices.

Phase 3: Expansion (Weeks 13-24)

Gradual rollout:

- Add 5 users per week

- New use case every 2 weeks

- Department by department

- Region by region

Training program:

- 2-hour basics workshop

- 1-on-1 power user sessions

- Weekly office hours

- Prompt engineering certification

Phase 4: Optimization (Ongoing)

Performance tuning:

- Monitor query patterns.

- Optimize frequent requests.

- Cache common results.

- Reduce response times.
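For “cache common results”, a plain memoizing cache is risky in finance because answers go stale when new filings land. A minimal sketch of a time-to-live cache keyed on the normalized question; the 15-minute TTL and the `run_query` stand-in are hypothetical, and a shared deployment would more likely use an external cache service.

```python
import time

class TTLCache:
    """Cache query results for a fixed number of seconds."""

    def __init__(self, ttl_seconds=900, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock  # injectable so expiry can be tested
        self._store = {}

    @staticmethod
    def _key(question):
        # Normalize case and whitespace so trivial rephrasings share an entry.
        return " ".join(question.lower().split())

    def get_or_compute(self, question, compute):
        key = self._key(question)
        now = self.clock()
        hit = self._store.get(key)
        if hit is not None and now - hit[0] < self.ttl:
            return hit[1]
        result = compute(question)
        self._store[key] = (now, result)
        return result

    def invalidate_all(self):
        """Call when new filings or earnings land."""
        self._store.clear()

calls = []
def run_query(q):  # hypothetical stand-in for the expensive retrieval + LLM call
    calls.append(q)
    return f"answer to {q!r}"

fake_now = [0.0]
cache = TTLCache(ttl_seconds=900, clock=lambda: fake_now[0])
cache.get_or_compute("Revenue by segment?", run_query)
cache.get_or_compute("revenue  by segment?", run_query)  # served from cache
fake_now[0] = 1000.0
cache.get_or_compute("Revenue by segment?", run_query)   # expired, recomputed
print(len(calls))
```

Pairing the TTL with an explicit `invalidate_all()` on earnings days keeps cached answers from outliving the data behind them.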

Quality improvement:

- Track accuracy metrics.

- Identify failure patterns.

- Retrain models quarterly.

- Update verification rules.

Measuring Success: KPIs That Actually Matter

Efficiency Metrics

Time savings:

- Hours saved per analyst per week

- Time from earnings release to model update

- Report generation speed

- Meeting prep time

Volume metrics:

- Documents processed per day

- Queries answered per hour

- Insights generated per quarter

- Coverage universe expansion

Quality Metrics

Accuracy tracking:

- Error rate in extracted data

- Hallucination frequency

- Calculation accuracy

- Source citation correctness
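These accuracy metrics can be computed from a sample of outputs that reviewers have checked by hand. A minimal sketch; the review fields are hypothetical, and “hallucination” is counted here as a figure with no support in the cited source, one reasonable definition among several.

```python
from dataclasses import dataclass

@dataclass
class ReviewedFigure:
    extracted_ok: bool    # value matches the source document
    supported: bool       # the figure exists in the source at all
    citation_ok: bool     # cited document and page are correct
    is_calculation: bool
    calc_ok: bool = True  # only meaningful when is_calculation is True

def accuracy_kpis(sample):
    if not sample:
        raise ValueError("empty review sample")
    n = len(sample)
    calcs = [r for r in sample if r.is_calculation]
    return {
        "extraction_error_rate": sum(not r.extracted_ok for r in sample) / n,
        "hallucination_rate": sum(not r.supported for r in sample) / n,
        "citation_correctness": sum(r.citation_ok for r in sample) / n,
        "calculation_accuracy": sum(r.calc_ok for r in calcs) / len(calcs) if calcs else None,
    }

# Hypothetical review of four figures.
sample = [
    ReviewedFigure(True, True, True, False),
    ReviewedFigure(True, True, False, True, calc_ok=True),
    ReviewedFigure(False, True, True, True, calc_ok=False),
    ReviewedFigure(False, False, False, False),
]
print(accuracy_kpis(sample))
```

Re-running the same review on a fresh sample each month turns these into trend lines rather than one-off numbers.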

Insight quality:

- Novel patterns discovered

- Predictive accuracy

- Actionable recommendations

- False positive rate

Business Impact

Financial returns:

- Alpha generated from faster analysis

- Cost savings from automation

- Revenue from expanded coverage

- Risk reduction from better compliance

Strategic value:

- Competitive advantage gained

- Client satisfaction scores

- Analyst retention rates

- Innovation velocity

Looking Ahead: The Future of Financial AI

Technical Evolution

Coming in 2025-2026:

- Longer context windows (100K+ token windows already ship in several models)

- Near-zero hallucination rates

- Real-time market integration

- Autonomous research agents

- Quantum-enhanced processing

Breakthrough capabilities:

- Cross-language financial analysis

- Video earnings call analysis

- Predictive scenario modeling

- Automated trading strategies

- Dynamic risk assessment

Market Transformation

Industry shifts:

- Consolidation around platforms

- Standardization of interfaces

- Democratization of capabilities

- Specialization of models

- Seamless integration

Competitive dynamics:

- Speed becomes table stakes

- Accuracy differentiates winners

- Proprietary data matters more

- Human judgment commands a premium

- Scale advantages compound

Regulatory Evolution

Anticipated requirements:

- Explainability mandates

- Bias testing requirements

- Model registration

- Audit trail standards

- Client disclosure rules

Compliance preparation:

- Document all model decisions

- Implement bias testing now

- Create model inventory

- Establish governance committee

- Engage with regulators early

Your Next Steps: Making This Real

If You’re an Individual Analyst

Tomorrow morning, install Daloopa’s Excel add-in. Start with document search. Build your prompt engineering skills by actually using it. Track your time savings. Share wins with your team. Stop writing SQL.

If You’re Managing a Team

Run an 8-week pilot with clear metrics. Pick your best analyst as champion. Start with low-risk, high-annoyance tasks (like summarizing earnings calls). Build success stories before asking for budget. Keep the old system running in parallel.

If You’re an Executive

Stop listening to promises. Demand proof: How much time saved? How many errors caught? What’s the real ROI? Invest in data quality before fancy models. Expect 18 months for full transformation, not 18 weeks.

The Bottom Line

LLMs for financial data analysis aren’t replacing analysts. They’re replacing the mind-numbing parts of analysis that make talented people quit.

The 95% time savings are real—but only with verified data. The difference between expensive failure and transformative success isn’t the AI model you choose. It’s the data infrastructure underneath it.

Raw LLMs hallucinate in up to 41% of finance-related queries¹. Daloopa’s verified data layer reduces that to less than 1%. That’s the difference between career-ending mistakes and career-defining efficiency.

References

1. Sarmah, Bhaskarjit, et al. “FailSafeQA: A Financial LLM Benchmark for AI Robustness & Compliance.” Ajith’s AI Pulse, 16 Feb. 2025. https://ajithp.com/2025/02/15/failsafeqa-evaluating-ai-hallucinations-robustness-and-compliance-in-financial-llms/

2. Morgan Stanley. “Launch of AI @ Morgan Stanley Debrief.” Morgan Stanley Press Release, 2024. https://www.morganstanley.com/press-releases/ai-at-morgan-stanley-debrief-launch. See also: OpenAI. “Morgan Stanley uses AI evals to shape the future of financial services.” https://openai.com/index/morgan-stanley/

3. V7 Labs. “An Introduction to Financial Statement Analysis With AI [2025].” V7 Labs, 2025. https://www.v7labs.com/blog/financial-statement-analysis-with-ai-guide

4. MongoDB. “New Benchmark Tests Reveal Key Vector Search Performance Factors.” MongoDB Blog, 20 Aug. 2025. https://www.mongodb.com/company/blog/innovation/new-benchmark-tests-reveal-key-vector-search-performance-factors

5. Sarmah, Bhaskarjit, et al. “HybridRAG: Integrating Knowledge Graphs and Vector Retrieval Augmented Generation for Efficient Information Extraction.” arXiv:2408.04948, 9 Aug. 2024. https://arxiv.org/abs/2408.04948